Original article available on Sifted. For the German translation, please scroll down.

(EN) While the pace of AI development and adoption has been swift, trust is lagging behind.

A recent KPMG study that surveyed 48,000 people across 47 countries found that only 46% are willing to trust AI systems, while 70% believe regulation is needed.

Without guidance around quality and assurance, the full potential of AI is unlikely to be realised.

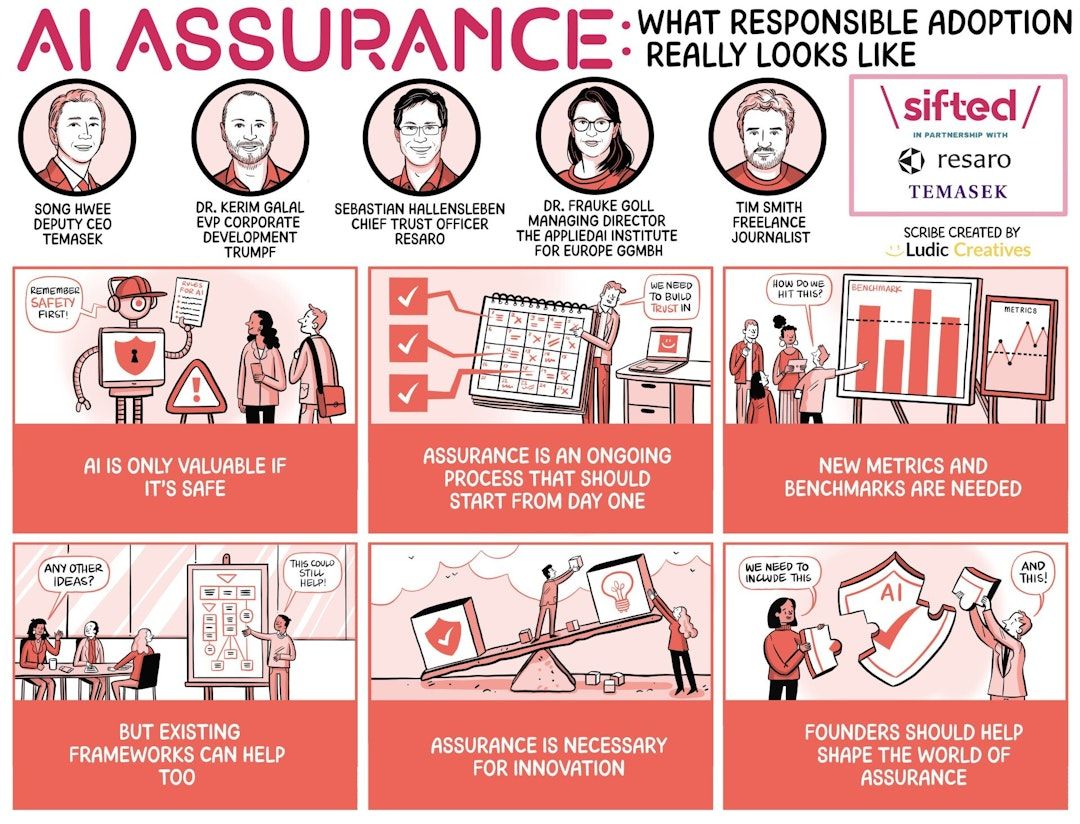

To delve deeper into how startups can find practical pathways for AI assurance in an era of rapid adoption, Sifted sat down with an expert panel.

We discussed the most effective ways to measure and communicate trust in AI systems, how existing frameworks can help validate new AI systems and how the technology poses new questions for mitigating risk.

Participating in the discussion were:

- Song Hwee Chia, Deputy CEO of Temasek, a global investment firm headquartered in Singapore.

- Sebastian Hallensleben, Chief Trust Officer at AI test lab Resaro.

- Dr Kerim Galal, Executive Vice President of Corporate Development, covering strategy, AI, M&A and in-house consulting, at German machine tools and laser tech company Trumpf.

- Dr Frauke Goll, Managing Director at the AppliedAI Institute for Europe GmbH.

Here are the key takeaways: